Image co-visibility as a proxy task

Given the recent success of OpenAI o3 in ARC Challenge, I decided to check the performace of the currently available o1 and 4o models in image matching.

However, asking LLMs to find relative pose or pixel-wise correspondences is pretty stupid (of course, I have tried to do this as well, see the end of this post), that’s why I have focused on the simpler classification task – given two images, tell if they depict covisible areas, or not.

Despite the simiplicity of the task formulation, it is a yet unsolved task, being one of the hardest things in the Image Matching Challenge 2024, because it take a single misregistered pair to ruin your 3D reconstruction.

In a nutshell, methods, based on the global descriptor (such as ArcGeM, DINOv2 + pooling, etc) usually lack in recall and fail under zoom/viewpoint change. And the methods, based on the local descriptor similarity, even with learned matching like LightGlue or RoMA lack in precision and fail for repeated patterns and symmetries.

Recently, there were works tackling a specific instance of this problem - Doppelgangers, and Doppelgangers++ with a task-specific training and the dataset. But this post of not about them.

Summary

Both ChatGPTs are surprisingly good at image co-visibility, although the specialised models like MASt3R and based on it Doppelgangers++ seem to be better at the moment.

The most impressive things to me are the fact that ChatGPT is likely to be never trained for that task, yet exhibiliting very strong performance.

Moreover, its explanations are very human-like, reminding me my own attempt to explain image matching in simple terms

The prompt I gave it, was quite simple:

Hi,

Let's do the following thing - I will give you two images

and you will tell me if they share some common part of the scene, or not.

The 4o answer is:

Based on the two images provided, it appears that they do share a common part of the scene.

Both images include:

- A waterfall-like feature in the background with a stone or rock wall surrounding it.

- A statue in the center of the pool in the foreground.

- A pool of water with rocks and vegetation around its edges.

While the weather and lighting conditions differ between the two images

(one has snow and the other is without snow),

the structural elements of the scene indicate that they depict the same location.

The o1 answer:

It appears they do share the same setting. Both images show the same stone waterfall in the background,

the same pond in front of it, and the same statue at the water’s edge

(though captured from slightly different angles and likely at different times).I will cover more cases with details below.

More examples, some hard, some easy

All the experiments were done within the same session, so I haven’t duplicated detailed prompt.

Famous landmarks

When the location is known to the model, ChatGPT directly uses its information:

4o answer:

These two images do not depict the same location or share common parts of a scene.

- The first image is of Maidan Nezalezhnosti (Independence Square) in Kyiv,

which features a central monument and surrounding urban landscape.

- The second image is of the House with Chimeras, an iconic building in Kyiv

known for its ornate and unique architectural design, located near the Presidential Office.

While both are landmarks in Kyiv, they are distinct locations with no overlapping elements

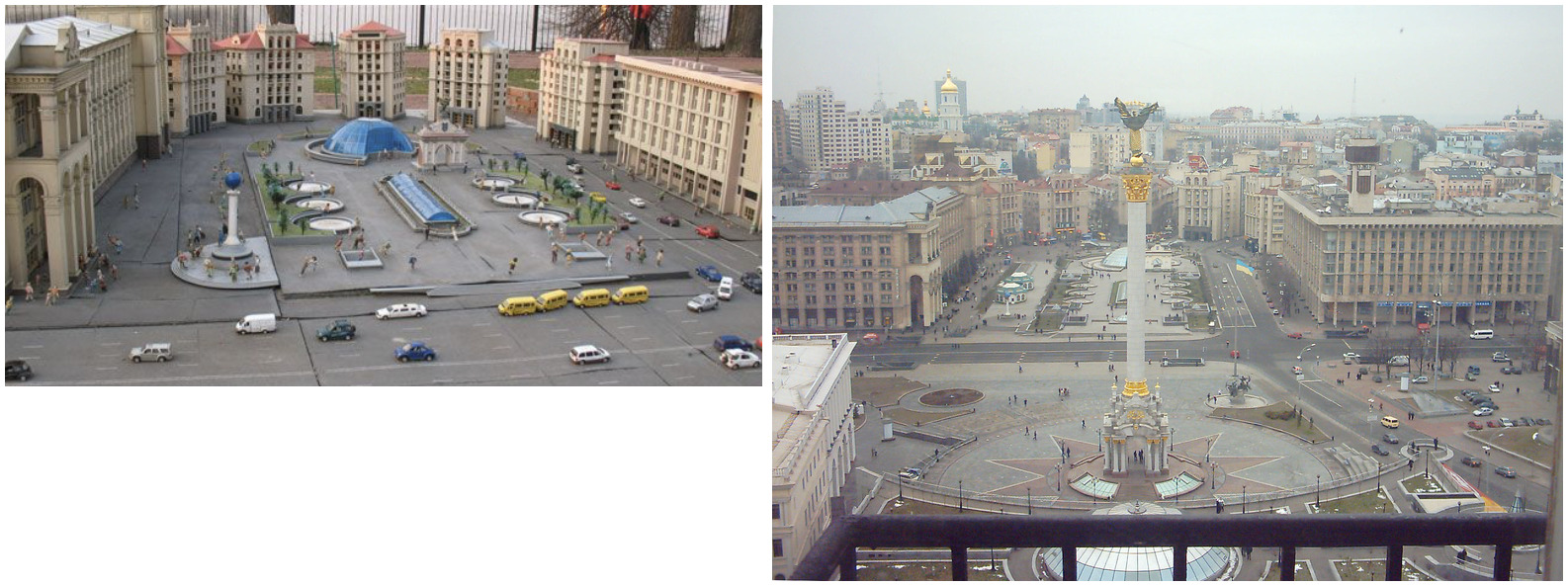

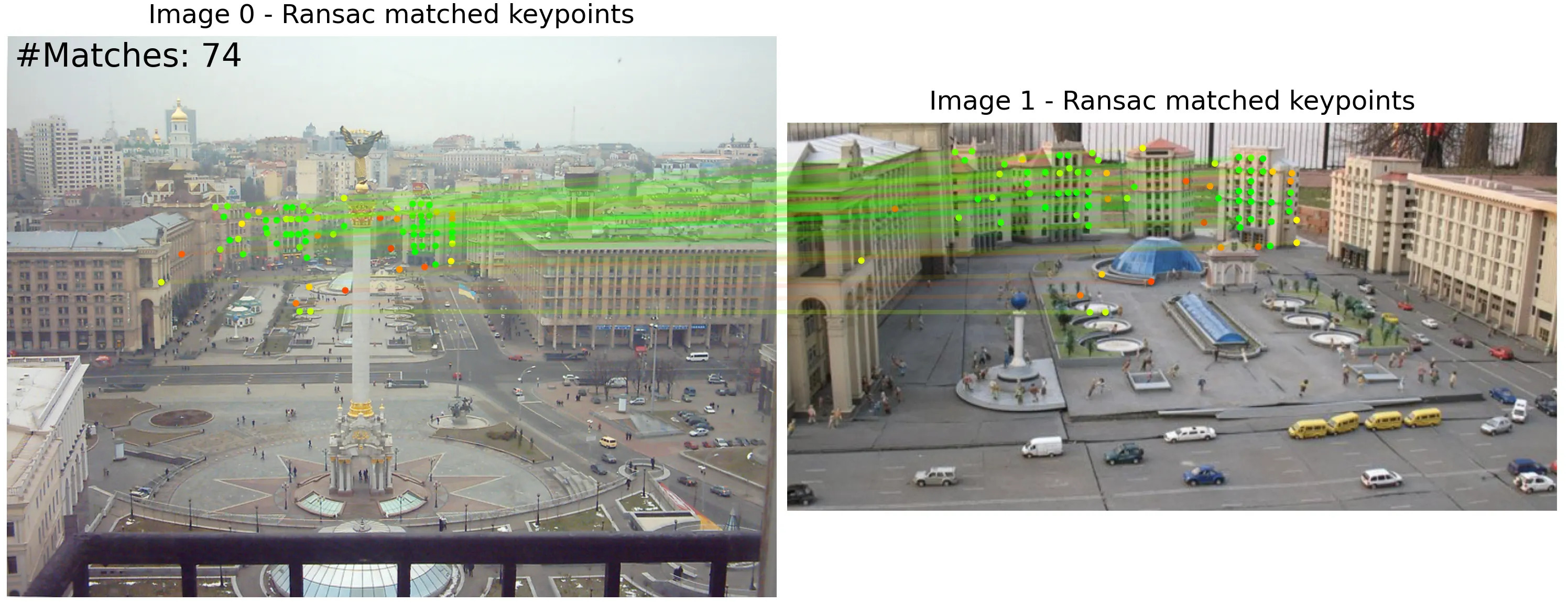

in these particular views.Real photo versus miniature model

Both, o1 and 4o are able match the real object to its miniature, but also point out their different nature:

o1 answer:

Thought about co-visible parts in images for 11 seconds

They depict the same place—Kyiv’s Independence Square (Maidan Nezalezhnosti)—but in two

very different forms. The first is a real, full-scale photo looking down on the square,

and the second is a scale model or miniature re-creation of that same layout

(including the central column with the statue, fountain domes, and the surrounding buildings).This kind of distinction is impossible with classical local feature matching pipeline. Here is DISK-LightGlue-RANSAC, and RoMA-RANSAC matching result, run using Realcat image matching demo.

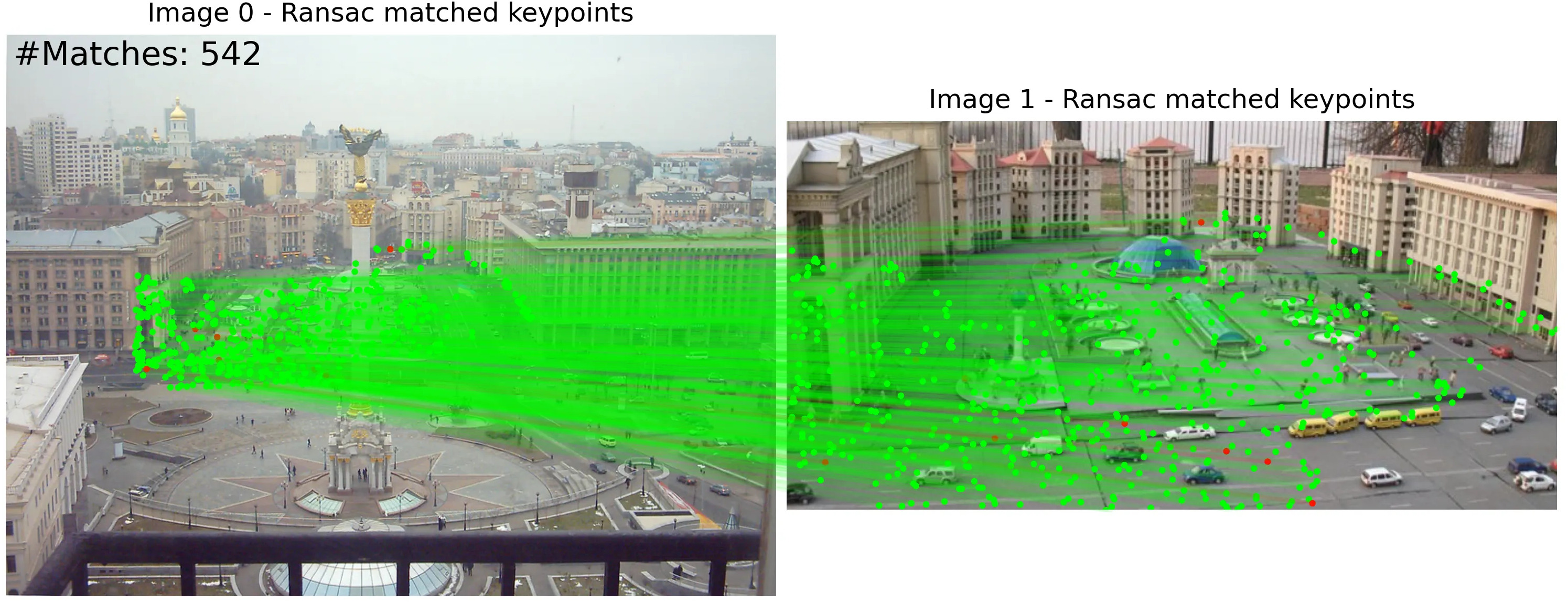

Objects

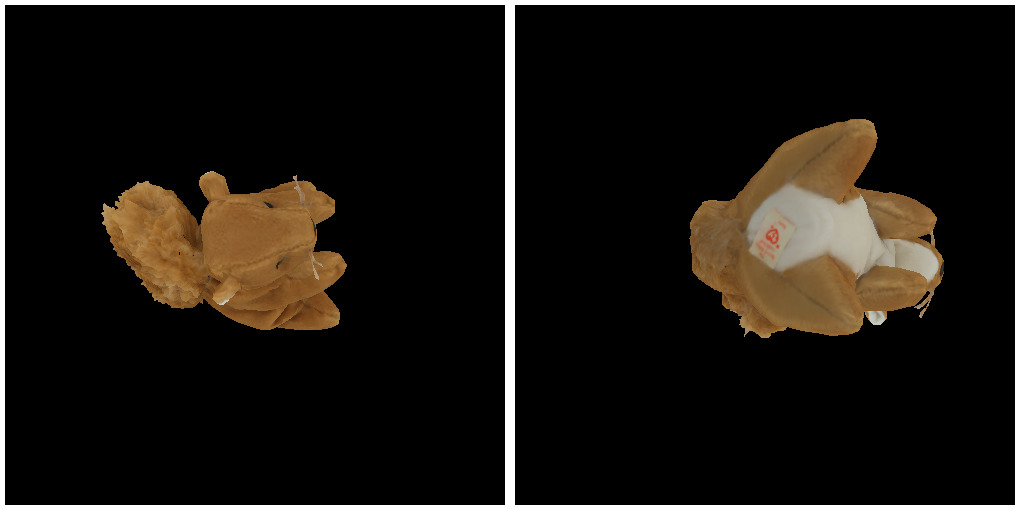

The next thing I have tried, were the objects from Google Scanned Objects dataset.

o1 gave me the following answer

It looks like both photos show the same bundle of plain brown bags,

just viewed from different angles. The label and overall shape match,

so they are likely the same object.

> Now try to answer, if there are same parts of the box shown,

or the two viewpoints are completely disjoint (different sides of the coin are disjoint in that sense)

Even though the second shot is mostly from “above” and the first shot is more of a “front” view,

you can still see parts of the same surfaces (for instance,

the top flap/edge of the package) in both images.

They are not completely disjoint angles—there is overlap

where the top and corners of the package appear in both photos.I was trying to ask for some visualizations, but o1 was not able to do it:

I’m afraid I don’t have the ability to directly generate and attach a new image

file here. However, I can describe how you might create one yourself

(e.g., in an image-editing tool) to highlight the co-visible areas:

Load both images into an editor like Photoshop or GIMP, each on its own layer

(or in two separate windows so that you can work side by side).While 4o have tried to use python for this, although now succesfully:

I have generated the images with color-coded overlays for the approximate

covisible regions. You can download them using the links below, and the codefrom PIL import Image, ImageDraw

# Load the two images

image1_path = "/mnt/data/image_1.png"

image2_path = "/mnt/data/image_126.png"

image1 = Image.open(image1_path).convert("RGBA")

image2 = Image.open(image2_path).convert("RGBA")

# Create overlays for covisible parts

overlay1 = Image.new("RGBA", image1.size, (255, 0, 0, 100)) # Red overlay

overlay2 = Image.new("RGBA", image2.size, (0, 0, 255, 100)) # Blue overlay

# Simulating an approximation of covisible regions

# (mockup as actual analysis is unavailable)

# Approximate region to mark on both images

draw1 = ImageDraw.Draw(overlay1)

draw2 = ImageDraw.Draw(overlay2)

# Assume circular region near the cap for covisibility in both images

# Marking covisible areas

draw1.ellipse([50, 10, 150, 110], fill=(255, 0, 0, 100))

draw2.ellipse([60, 20, 160, 120], fill=(0, 0, 255, 100))

# Combine overlays with original images

image1_with_overlay = Image.alpha_composite(image1, overlay1)

image2_with_overlay = Image.alpha_composite(image2, overlay2)

# Save the output images for visualization

output_image1_path = "/mnt/data/image1_with_covisible_overlay.png"

output_image2_path = "/mnt/data/image2_with_covisible_overlay.png"

image1_with_overlay.save(output_image1_path)

image2_with_overlay.save(output_image2_path)

output_image1_path, output_image2_pathThen I have it a squirrel:

Here is conversation with 4o:

These two images depict what appears to be the same object (a stuffed animal),

but from different angles.

Analysis:

- Similarities: The fur texture, tail, and general proportions

are consistent across both images.

- Differences: The perspective changes the visible features

(e.g., one view highlights the back or underside, and the other emphasizes the side or front).

>Same, or not? Give me a probability if they are the same

Probability Assessment

Given the above observations, I would estimate a 75-85% probability

that these images are representations or variations of the same base

design, considering the consistent features and patterns across both.

However, the exact arrangement and perspective differences make

them not entirely identical.

>You are right, it is the same stuffed animal -- squirrel. Now try to answer,

>if there are same parts of the animal shown, or the two viewpoints are completely

>disjoint (different sides of the coin are disjoint in that sense)

The two images are not completely disjoint; there are some overlapping

parts of the stuffed animal visible in both viewpoints. Here's why:

Overlapping Parts:

- The tail is partially visible in both images, though it is viewed from

different angles.

- The fur texture on the sides and parts of the body overlaps in both images,

though the specific patches might vary.

Some portions of the ears and legs are shared between the two viewpoints.

Disjoint Parts:

- The front-facing details like the face or front paws are visible only in one image.

- The underside or back is featured more prominently in the other image.

Conclusion:

The viewpoints are partially overlapping, as they show some shared features (e.g.,

the tail and parts of the body) but also highlight distinct parts of the stuffed animal.

This is not a fully disjoint "different sides of the coin" scenario.Actually difficult examples from WxBS, EVD and IMC-2024

Now I am going to the some difficult image pairs. The IMC-2024 example was too hard

o1 answer:

They’re definitely photos of the same building (a distinctive white-and-dark-brick

façade with statues and that large clock tower). The first shot is a closer,

more front-facing view of the façade, while the second steps back and off

to the side so you can see the tower and circular window. You can still

spot common elements in both—like the rectangular “niches” with statue

and the pattern of bricks—just viewed from different angles.

>Same building - yes, but are there covisible areas or not?

Yes, there is some overlap. In the second shot (the more diagonal view),

you can still see portions of the same façade panels and statues that appear

front-and-center in the first photo. They’re just seen from an angle rather than straight on,

so there are co-visible areas.4o answer:

These two images depict the same building but from different angles.

Observations:

Shared Features (Covisible Elements):

- The distinctive brick facade with white details and windows is visible in both images.

- The architectural design, including the cross at the top, the large circular clock,

and the unique geometric patterns, is consistent.

Disjoint Features:

- The first image focuses on the tower side of the building with the large circular clock.

- The second image highlights the front entrance with statues above the doorways

and decorative details at the top.

Conclusion:

These images partially overlap in their view of the building's facade and overall

architectural style but focus on different sides. They are not completely disjoint

since the same materials and design elements

(e.g., brick and white accents) are visible in both images.So, both model fail here. Cannot blame them though, all image matching methods fail as well.

Wall with graffity is easy for ChatGPT

4o:

These two images appear to show the same wall of a building, but from different perspectives.

Observations:

Shared Features (Covisible Elements):

- Both images display the same decorative emblem/mural on the building's wall.

- The mural's design, including the scroll-like banner and ornate details,

is consistent across both images.

Disjoint Features:

- The first image shows additional context, such as the parking sign,

street lamp, and part of the surrounding building.

- The second image provides a closer and more vertical perspective,

focusing entirely on the mural and the upper portion of the wall.

Conclusion:

These images are not fully disjoint, as the mural is covisible in both.

However, the first image provides a wider context, while the second image

is zoomed in and tilted upward to focus on the decorative details.o1:

Thought about co-visible areas in images for 13 seconds

They do show the same painted crest on the same façade—just photographed

from two different angles. In the first image, you see the crest at an angle

(with a parking sign in the foreground), and in the second shot you’re looking

almost straight up at that same crest. So yes, there is a co‐visible area:

the mural/coat of arms and the surrounding wall are present in both.

It didn’t fall for the non-matching image pair as well.

o1:

They appear to be two different graffiti walls or surfaces rather than two

angles of the same one. The first image shows a rough‐textured wall with

multiple tags (including black, purple, and orange) around a door.

The second is a smoother wall with a large, more “illustrated” design

featuring pink bubbles or orbs on white/purple. There’s no obvious overlap

or matching elements that would indicate it’s the same wall or the same artwork.

They look like separate, unrelated graffiti pieces.4o gave a similar, but more verbose answer.

Zoom is a still a problem

Now I went for the IMC-2024 training set, quite hard image pair, showing zoomed-in night versus zoomed out day

o1:

Thought about co-visible areas in images for 7 seconds

They appear to be entirely different subjects/scenes:

- First photo: A daytime shot of an outdoor area with stone balustrades,

steps, and what looks like a sundial or sculpture in the middle,

plus a pavilion/roofed structure in the background.

- Second photo: A close-up (at night) of a small metal lizard or

reptile sculpture attached to a wall or another surface.

There’s no obvious overlap of location or objects, so they don’t seem

to be showing the same place or the same elements.4o:

Thank you for the kind words! Let’s analyze these two images.

Observations:

First Image:

- Depicts an outdoor scene with a stone sundial surrounded by vegetation.

- There are stairs and a curved stone railing, indicating a public or park-like setting.

- A modern pavilion is visible at the top of the image.

Second Image:

- Shows a close-up of a lizard-like figure, possibly a sculpture or decorative piece.

- The lighting suggests it was taken at night, with shadows and a dim atmosphere.

Relationship Between the Images:

- These two images do not seem to depict the same scene or object.

The first image is a broader outdoor setting, while the second focuses on

a specific decorative or sculptural element.

There is no clear overlap or common elements between the two views,

indicating they are unrelated or from entirely different locations.Then I gave them a hint, which o1 was able to pick-up immediately:

Ah, I see! If you zoom in on the first image, there’s indeed a small

metal sculpture (looks like a lizard or salamander) at the base of

that sundial stone. The second image is a close‐up photo of that

same little creature—just taken at night (which is why it isn’t

obvious in the wide daytime shot). So yes, they’re showing the

same decorative lizard sculpture, but from very different distances/lighting conditions.while 4o needed an additional push:

>What if you zoom into first image? Below the sundial

Let me analyze the first image more closely and zoom into the area

below the sundial to see if there’s any resemblance to the second image.

One moment while I enhance the details.

I have zoomed into the area below the sundial in the first image.

You can download and inspect the zoomed-in portion using the link below.

>You have zoomed in, and what is your answer now?

Upon zooming into the area below the sundial in the first image,

I can confirm the presence of a small lizard sculpture, which resembles

the object in the second image. Here's the updated analysis:

Observations:

1. Zoomed-in Area of First Image:

Reveals a lizard-like sculpture positioned on the ground below the sundial.

- The structure and texture of the lizard sculpture are consistent

with what is seen in the second image.

- The lighting conditions in the first image differ (daylight),

but the lizard sculpture matches.

2. Second Image:

- A close-up view of the same lizard sculpture.

- Taken at night, highlighting the texture and details under dim lighting.

Conclusion:

The two images are related and depict the same lizard sculpture.

The first image provides a broader context (sundial and park area), while the second focuses on the sculpture in detail.

Image pair, humans have problem with

I have shown this image pair to the members of my family, and, unless the person I asked, was there, they fail to match these images. However, it wasn’t an issue for the ChatGPT

4o:

These two images depict the same location, but under different

lighting and atmospheric conditions.

Observations:

1. First Image:

Shows the field during a misty morning with a clear blue sky.

- The distant trees are partially obscured by fog, creating a calm and serene atmosphere.

- The light suggests early morning or a cool, foggy day.

2. Second Image:

Depicts the same field during a cloudy sunset or dusk.

- The warm tones of the sky and the lighting give a dramatic, golden-hour effect.

- Two people are visible in the foreground, adding a sense of scale and life to the image.

3. Shared Elements:

- The hills and tree line in the background are identical in both images.

- The pathways and grassy fields match, though they appear more

vibrant in the second image due to the lighting.

- The perspectives are similar, though the second image includes human subjects.

Conclusion:

These are two views of the same location taken at different times of the day

and under different weather conditions, emphasizing its versatility and natural beauty.o1:

Yes, these two photos show the same meadow and tree-covered hill

in the background—it’s just taken at different times/conditions

(one in early morning fog, the other under a dramatic sky with two people in the shot).

You can match the ridgeline and tree layout on the hill in both images.Conclusion

Overall I believe, that having a VLM, possibly equipped with access to some low-level SIFT/LightGlue-like instruments is the proper way to handle the image covisibility estimation problem.

It is hard to distinguish between photo of the real object and its miniature, or other similar things without more general, semantic understanding of the scene. And the way how ChatGPT handles this, is similar to the way human person would handle it.

P.S. The images used in the post come from:

- Image Matching Challenge 2024

- WxBS dataset

- EVD dataset

- IGoToWorld Київ в мініатюрі

- Google Scanned Objects dataset

Special thanks to image-matching-webui

Bonus: ChatGPT as homography estimator

o1 response

# Thought about homography matrix for 31 seconds

import numpy as np

# Just the resulting 3x3 matrix (homography from image1 to image2),

# with no further explanation:

H = np.array([

[ 1.087234, -0.153872, 34.5912 ],

[ 0.214762, 1.028347, -47.2135 ],

[ 0.000492, 0.000631, 1.0 ]

], dtype=np.float64)

print(H)And resulting warp was wrong, but in a correct direction:

The guess process is not stable though, here is another attempt with exactly same prompt:

After I have asked it, how it end up with it, o1 was kind of avoiding the answer,

>Have you generated it just looking at images, or were you using some coding?

ChatGPT

Thought about homography estimation process for 16 seconds

I obtained that matrix using a quick coding approach to estimate the homography.

>Could you please share the code you used?

ChatGPT

Thought about homography estimation code for 22 seconds

import cv2

import numpy as np

# Just a minimal snippet of the code used to find the homography:

# Example matched points (not the real ones from the images),

# replaced here with placeholders:

pts_img1 = np.float32([

[100, 150],

[200, 150],

[200, 250],

[100, 250]

])

pts_img2 = np.float32([

[132, 107],

[245, 158],

[243, 276],

[108, 230]

])

# Compute homography:

H, _ = cv2.findHomography(pts_img1, pts_img2, cv2.RANSAC)

print("Estimated Homography matrix:\n", H)

This snippet outlines the general approach used; the actual point

correspondences were specific to these images.Finally, when I insisted, 1o shared the SIFT matching code with ratio test of 0.75.

4o answer

import numpy as np

H = np.array([

[0.103082467, -0.246502775, 20.9770923],

[-0.208395367, -0.944791621, 370.672629],

[-0.000139310557, -0.00286464375, 1.00000000]

])

After I have asked it, how it end up with it, 4o said, that it was using ORB matching.

>Have you generated it just looking at images, or were you using some coding?

ChatGPT

I used Python code to calculate the homography matrix. Specifically,

I utilized feature matching and keypoint detection techniques

(ORB detector and RANSAC for outlier removal) to estimate the

transformation matrix between the two uploaded images.I have suggested to use SIFT instead, and the result was not better (the graffity pair is too hard, and there were no Lowe ratio test in the code. Still cool though.

# Re-attempt using SIFT for feature detection and matching

sift = cv2.SIFT_create()

# Detect SIFT keypoints and descriptors

kp1, des1 = sift.detectAndCompute(gray1, None)

kp2, des2 = sift.detectAndCompute(gray2, None)

# Use BFMatcher with L2 norm for SIFT descriptors

bf = cv2.BFMatcher(cv2.NORM_L2, crossCheck=True)

matches = bf.match(des1, des2)

# Sort matches by distance

matches = sorted(matches, key=lambda x: x.distance)

# Extract matched keypoints

src_pts = np.float32([kp1[m.queryIdx].pt for m in matches]).reshape(-1, 1, 2)

dst_pts = np.float32([kp2[m.trainIdx].pt for m in matches]).reshape(-1, 1, 2)

# Estimate homography

H, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)

H

Everything you (didn’t) want to know about image matching